Reservoir sampling with few random bits

Assume you are Nisus, the “one cross each” guy in Monty Python’s “Life of Brian” movie. Condemned prisoners file past you one by one, on their way to crucifixion. Now it happens that this particular day, feel both both benevolent and very indecisive. Because you feel benevolent, you want to liberate one of the condemned. But because you feel indecisive, you don’t want to pick the lucky one yourself, so you have to leave it to chance. And you don’t want anybody to have grounds to to complain, so you want to give each prisoner exactly the same chance.

This would be a relatively simple task if you knew the number of prisoners, n, ahead of time: Pick a random number k ∈ {1, …, n}, count while you hand our the crosses, and tell the kth prisoner to get lost.

But alas, the Romans judiciary system is big and effective, and you have no idea how many prisoners will emerge from the dungeons today. So you might plan to just let them all wait next to you while you count them (to figure out what n is), but that won’t work either: There is not enough space to have them waiting, and some might try to escape in the confusion before you are done counting. So in fact, you can only have one prisoner waiting at a time. As soon as the next one comes, you have to send one of them towards execution.

This is still a solvable problem, and the solution is Reservoir sampling: You let the first prisoner wait. When the second one comes, you pick one of them with probability ½, and let him wait. When the third one comes, with probability ⅔ you send him on, and with probability ⅓ to let him wait. The fourth is sent forth with probability ¼ and kept waiting with probability ¼. And so on. When finally the dungeon master tells you that all prisoners have emerged, whoever is waiting next to you is the lucky guy.

Before you do that, you should convince yourself that this is fair: The kth prisoner is the lucky one if you let him wait and from then on, all other prisoners are sent forth. The probability of this happening is ¹ ⁄ ₖ ⋅ ᵏ ⁄ ₖ₊₁ ⋅ ᵏ⁺¹ ⁄ ₖ₊₂ ⋅ ⋯ ⋅ ⁿ⁻¹ ⁄ ₙ which, after a number of cancellations, resolves to ¹ ⁄ ₙ. Because k does no longer show up in this calculation, the probability is the same for everybody, and hence fair.

How to not make a decision

So far so good. But the story misses an important point: Where do you get your randomness from? Just picking randomly is hardly random. Luckily, you just have been given your guerdon, and you have a Roman coin in your pocket. Let us assume the coin is perfectly fair: With probability ½ it shows the head of Libertas, and with probability ½ a bunch of horses. It is easy to use this coin to distinguish between the first and second prisoners, but when the third comes out, things get hairy.

You can try to throw the coin many times; say, 10 times. This has 210 = 1024 outcomes, which we can interpret as a number between 1 and 1024. If you give the third prisoner his freedom when this number is between 1 and 341, and you give him a cross if the number is between 342 and 1024, then you made pretty fair call. Not yet fully satisfying, but let’s go with it for now.

There is, however, another problem with this: You have to throw the coin a lot of times: For each prisoner, you toss it 10 times! This is too slow, and the plebs will get impatient. And it clearly is wasteful. The great philosopher Shannonus taught you that throwing the coin ten times gives you 10 bits of entropy, but you actually need only −(⅓ ⋅ log (⅓) + ⅔ ⋅ log (⅔)) = 0.918… bits to make this ⅓-⅔ decision. We should use those left-over 9.81 bits! How do we do that?

Unreal real randomness

To come up with a better (and maybe even optimal) plan, imagine for a moment that you had a source of randomness that gives you a real real number in the range (0, 1), uniformly. Clearly, that would solve your problem, because when prisoner k emerges, you can draw a random number r and let this prisoner wait if r < ¹ ⁄ ₖ.

But you don’t actually have to draw a random number more than once: It suffices to pick a single r ∈ (0, 1) at the very beginning. You just have to be a bit cleverer when deciding whether to keep the kths prisoner next to you. Let’s go through it step by step:

When Augustus, the first prisoner, comes out, you simply keep him.

When Brutus, the second prisoner, comes out, you keep him with probability ½. You do that if r < ½. You also start some bookkeeping, by remembering the range of r that has led to this outcome; in this case, the range is (0, ½). If you picked Augustus, the range is (½, 1).

Now Claudius comes out, and you want to keep him with probability ⅓. If the range that you remembered is (0, ½), you keep him if r < ⅙. Similarly, if the range is (½, 1) you keep him if r < ½ + ⅙ = ⁴ ⁄ ₆.

The currently remembered range is now (⁴ ⁄ ₆, 1) if Augustus is waiting, (⅙, ½) if Brutus is waiting and either (0, ⅙) or (½, ⁴ ⁄ ₆) if Claudius is waiting. Notice that the ranges are not all of the same size, but if you add the lengths of each prisoner’s range, you have ⅓, which means that every prisoner has the same chance to be waiting so far.

Finally, Decimus shows up. You repeat this procedure: Look at the current interval; if r is in the left quarter of it, Decimus stays, otherwise he leaves.

I tried to visualize this, and came up with this. Pick r, locate the right spot on the x axis, and you can read off, by going down, who is waiting next you.

Back to using coins

With this strategy in mind, we can go back to using a coin. Now, a single coin flip does not give us r. 1000 coin flips don’t either. If we could flip the coin an infinite number of times, yes, then we could get r out of this: The first coin flip decides the first bit after the decimal binary point: Head is 0, horses are 1. The second the second bit. And so on.

But note that you don’t need to know precisely r. To decide whether Augustus or Brutus stay, you only need to know if r is in the left or the right half – and you know that after the first coin flip. You can also tell whether Claudius stays as soon as you threw the coin often enough to determine if r < ⅙. This might already be the case after a single coin throw (if it shows horses), or after a few more. The likelihood that you can’t decide with certainty whether r < ⅓ goes down exponentially, so with probability 1, you will come to a conclusion eventually.

And the good thing is: If you were unlucky and had to throw the coin very often, then you learned a lot about r, and you can decide the next few prisoners without throwing a coin at all! In this sense, we managed to use “left-over entropy”.

And the other good thing is: There are no rounding errors any more. Every prisoner has the same chance to be freed.

A visualization

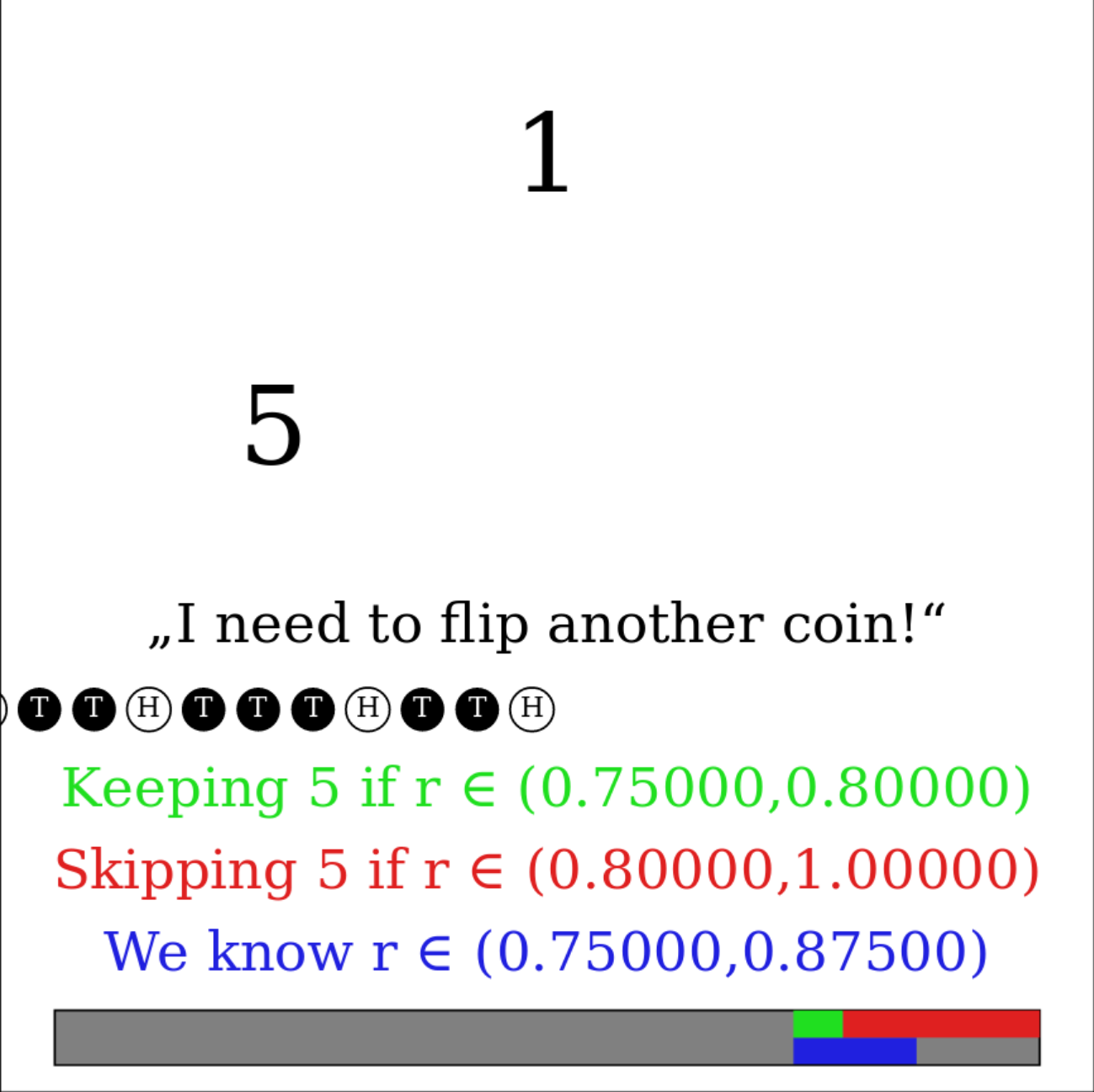

I tried to visualize the whole story, using CodeWorld. In this not very pretty animation, you see the prisoners (so far just simple numbers) coming from the left. Nisus (not shown) either lets them wait in the slot in the top, or sends them to the right. Sometimes he needs to toss a coin. Below you can see, in numbers and in a bar, what ranges of r are interesting: In green, the range which indicates that the current prisoner can stay, in red the range where he has to go, and in blue the range that r is known so far.

Probably, by the time you have read this until now, the animation is not at the beginning any more. Move the mouse over it, and you will see controls in the lower left corner; the first button resets the animation. You can also check out the source code.

Open questions

So Nisus is happy, because with few coin tosses, he can fairly pick a random prisoner to free. I am not fully satisfied yet, because of two option questions:

- Is this algorithm optimal? (And what does it mean to be optimal?)

- What is the expected number of coin throws for n prisoners? Does this number converge to log (n), which is the entropy of the result?

If you know the anwers, let me know!

Related ideas

Brent Yorgey pointed me to a sequence of blog posts by Jeremy Gibbons, on “Arithmetic Coding”, which use a similar structure of dividing the unit intervals.

Comments

Lower Bound

Let n ∈ {2, 3, …}, and consider an algorithm that generates independent random variables X2, X3, …, Xn with distributions Ber(1/2), Ber(1/3), …, Ber(1/n), respectively, from fair coin flips. By Knuth and Yao (1976) or Cover and Thomas, Section 5.11, the expected number of coin flips satisfies

E[#coin flips] ≥ H(X2, X3, …, Xn),

where the entropy is measured in bits. This lower bound is of course also valid for the procedure described in the blog post.

Upper Bound

Let n ∈ {2, 3, …}. By Knuth and Yao, there exists an algorithm satisfying

E[#coin flips] < H(X2, X3, …, Xn) + 2.

Unfortunately, the procedure described in the blog post does not necessarily satisfy that bound because is not optimal for fixed n. This can be seen from the attached “generating tree” of the procedure for n = 5 (start at the root; move to the left child if you observe heads; move to the right child if you observe tails; stop if you encounter a leaf; the leaf represents the sequence (X2, X3, X4, X5) generated from the coin flips in hexadecimal notation). Observe that for example “3” appears twice at depth 5, which can be argued to be strictly suboptimal. (For an optimal algorithm, any sequence would appear at most once at any depth.)

However, it turns out that the situation is not too bad: at any depth, any (X2, X3, X4, X5) sequence appears at most twice. This is because of the interval structure of your procedure, and can be shown to hold for any n ∈ {2, 3, …}. Omitting the details, the result by Knuth and Yao can be adapted to show that your procedure satisfies

E[#coin flips] < H(X2, X3, …, Xn) + 3.

Thus, the following result is obtained:

Performance

For any fixed n ∈ {2, 3, …}, your procedure needs on expectation at most three more coin flips to generate (X2, X3, …, Xn) than an optimal algorithm that is allowed to depend on n.

Asymptotics

Because X2, X3, …, Xn are independent,

H(X2, X3, …, Xn) = Hb(1/2) + Hb(1/3) + ⋯ + Hb(1/n),

where Hb denotes the binary entropy function. Consequently, it is not difficult to show that

H(X2, X3, …, Xn) = log2n + ∑k = 2…n(1/k) ⋅ log2(k − 1).

Asymptotically, this behaves like (ln n)2/(2 ⋅ ln 2), i.e.,

limn → ∞(H(X2, X3, …, Xn))/((ln n)2/(2 ⋅ ln 2)) = 1.

References

D. E. Knuth and A. C. Yao. The complexity of nonuniform random number generation. In Algorithms and Complexity: New Directions and Recent Results, 1976.

T. M. Cover and J. A. Thomas. Elements of Information Theory, 2nd. ed., 2006.

Cheers,

Christoph

Have something to say? You can post a comment by sending an e-Mail to me at <mail@joachim-breitner.de>, and I will include it here.

I recently enjoyed reading your post “Reservoir sampling with few random bits”. I think I have a partial answer to one of the open questions.

I believe that the expected number of coin tosses can’t get close to log n. Unfortunately this is for entropy reasons, and I have no idea how many coins are actually needed!

The process described doesn’t just choose one person, but chooses a sequence of people, and saves the last one picked. Thus, a better lower bound for the number of coin tosses is not the entropy of uniform selection from [n], but rather the entropy of selecting S ⊂ [n]. This grows as log (n) + C ⋅ log (n)2, for some C between ¼ and ½.

A few details on the derivation follow: This S is distributed as P(S) = ∏k ∈ S1/k ⋅ ∏k ∉ S(1 − 1/k). Looking at the entropy, the coefficient of log (k) is ∑S ∋ kP(S) = 1/k, and that of log (k/(k − 1)) is 1 − 1/k. Thus the entropy is H(n) = ∑k = 1...n1/k ⋅ log (k) + (1 − 1/k) ⋅ log (k/(k − 1)). This telescopes to H(n) = log (n) + ∑k = 2..n − 11/(k + 1) ⋅ log (k). Since ∑k = 1...n1/k ⋅ log (k) 1/2 ⋅ log (n)2, we get the result.

Thanks for the interesting read! Ben